GPGPU cluster

The first unit is a high-performance computing cluster based on graphics processors (GPUs), which in recent years have become the primary means of processing big data and running artificial intelligence workloads.

- GPGPU Cluster
  - 2 x GPGPU nodes
    - Model: Dell PowerEdge XE8545
    - CPUs: 2 x AMD EPYC 7413 24-Core Processor
    - Memory: 1 TB
    - OS: Ubuntu
    - 4 x NVIDIA HGX A100 80 GB GPGPU
    - NVLink Redstone
  - SLURM cluster management
    - Job submission
      - Instructions in the next presentation
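As a rough illustration of what a GPU job request could look like on these nodes, the sketch below builds the text of a SLURM batch script in Python and checks the request against the per-node limits listed above. The `#SBATCH` options are standard `sbatch` options, but the helper `make_sbatch_script`, its defaults, and the assumption that the GPUs are exposed as a generic resource named `gpu` are illustrative only; the actual submission procedure for this cluster is covered in the next presentation.

```python
# Per-node limits taken from the hardware list above.
NODES = 2
GPUS_PER_NODE = 4           # 4 x A100 80 GB per node
CORES_PER_NODE = 2 * 24     # 2 x AMD EPYC 7413, 24 cores each


def make_sbatch_script(job_name, command, nodes=1, gpus_per_node=1,
                       cpus_per_task=4, time_limit="01:00:00"):
    """Return the text of a SLURM batch script (hypothetical helper).

    Assumes the cluster exposes GPUs as a GRES named "gpu"; the real
    site configuration may differ.
    """
    if not 1 <= nodes <= NODES:
        raise ValueError(f"nodes must be between 1 and {NODES}")
    if not 1 <= gpus_per_node <= GPUS_PER_NODE:
        raise ValueError(f"gpus_per_node must be between 1 and {GPUS_PER_NODE}")
    if not 1 <= cpus_per_task <= CORES_PER_NODE:
        raise ValueError(f"cpus_per_task must be between 1 and {CORES_PER_NODE}")

    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        "#SBATCH --ntasks-per-node=1",
        f"#SBATCH --gres=gpu:{gpus_per_node}",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        f"#SBATCH --time={time_limit}",
        "",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Request one full node: all four GPUs and 48 cores for a single task.
    print(make_sbatch_script("train", "python train.py",
                             gpus_per_node=4, cpus_per_task=48))
```

The printed text could be saved to a file and passed to `sbatch`; partition and account options are left out because the source does not name them.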

OpenStack cloud

The second unit is a cloud computing platform that will provide fast and simple access to computational resources for researchers.


- 3 x OpenStack Cloud Controllers
  - Model: Dell PowerEdge R650xs
  - CPU: 1 x 20-core Intel(R) Xeon(R) Silver 4316 CPU @ 2.30GHz
  - Memory: 128 GB
  - OS: Ubuntu
- 10 x OpenStack Compute nodes
  - Model: Dell PowerEdge R750
  - CPU: 2 x 32-core Intel(R) Xeon(R) Gold 6338 CPU @ 2.00GHz
  - Memory: 1.5 TB
  - OS: Ubuntu
- Total
  - Computing power: 640 cores
  - Memory: 15 TB
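The totals can be reproduced from the per-node figures. The short sketch below does the arithmetic; the `NodeGroup` class is a hypothetical helper, and 1 TB is taken as 1024 GB. It also shows that the stated totals count the compute nodes only, not the controllers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """A group of identical servers (illustrative helper, not part of OpenStack)."""
    name: str
    count: int
    sockets: int
    cores_per_socket: int
    memory_gb: int

    @property
    def total_cores(self) -> int:
        return self.count * self.sockets * self.cores_per_socket

    @property
    def total_memory_tb(self) -> float:
        # Assumes 1 TB = 1024 GB.
        return self.count * self.memory_gb / 1024


controllers = NodeGroup("controllers", count=3, sockets=1,
                        cores_per_socket=20, memory_gb=128)
compute = NodeGroup("compute", count=10, sockets=2,
                    cores_per_socket=32, memory_gb=1536)

if __name__ == "__main__":
    for group in (controllers, compute):
        print(f"{group.name}: {group.total_cores} cores, "
              f"{group.total_memory_tb} TB memory")
```

For the compute group this gives 10 x 2 x 32 = 640 cores and 10 x 1.5 TB = 15 TB, matching the totals above.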

Data storage

The third unit is a large data storage system integrated with the previous two units, in which researchers can store their data sets. Within the storage space, a repository of open data sets has also been established, where researchers can openly publish data sets and results. This increases their visibility, opens opportunities for collaboration, and puts the principles of open science into practice.

- Data storage nodes

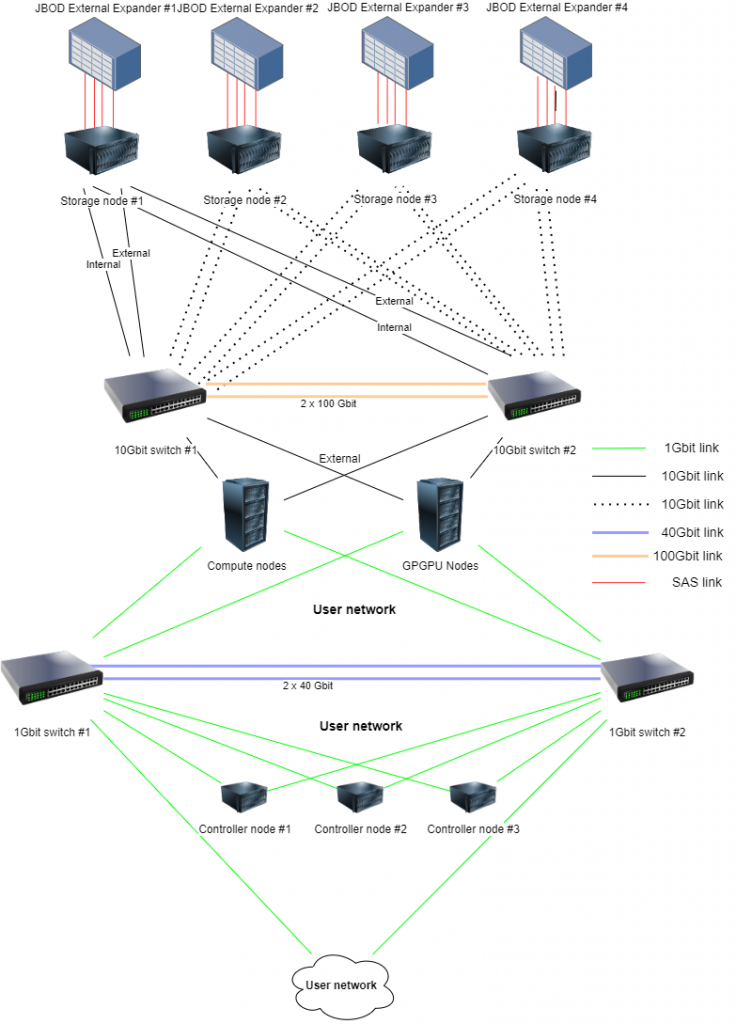

- 4 x Ceph Storage nodes
  - Model: Dell PowerEdge R750xs
  - CPUs: 2 x 20-core Intel(R) Xeon(R) Silver 4316 CPU @ 2.30GHz
  - Memory: 512 GB
  - OS: Ubuntu
  - Storage:
    - 2 x 480 GB SSD (OS)
    - 2 x 960 GB mixed-use SSD
    - 4 x 400 GB write-intensive SSD
- 4 x JBOD External Expander
  - SAS Disk Enclosures with a capacity of 84 disks
  - SAS, SATA and SSD intermix allowed
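The figures above are enough to work out the number of disk slots and the amount of SSD in the storage nodes, but not the raw capacity, because the size of the disks in the enclosures is not stated. The sketch below therefore takes the disk size as a parameter. The three-way replication default is an assumption (it is Ceph's usual default for replicated pools, not a documented setting of this installation), and the 10 TB disk size in the example is purely hypothetical.

```python
STORAGE_NODES = 4
# SSDs per node from the list above: OS, mixed-use and write-intensive drives.
SSD_GB_PER_NODE = 2 * 480 + 2 * 960 + 4 * 400

ENCLOSURES = 4
SLOTS_PER_ENCLOSURE = 84


def total_ssd_gb() -> int:
    """SSD capacity inside the storage nodes themselves, in GB."""
    return STORAGE_NODES * SSD_GB_PER_NODE


def total_slots() -> int:
    """Number of disk slots across all JBOD enclosures."""
    return ENCLOSURES * SLOTS_PER_ENCLOSURE


def usable_capacity_tb(disk_tb: float, replicas: int = 3) -> float:
    """Usable capacity if every slot holds a disk of `disk_tb` TB.

    Assumes a replicated pool that keeps `replicas` copies of each object
    and ignores filesystem and Ceph overhead.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return total_slots() * disk_tb / replicas


if __name__ == "__main__":
    print("SSD in storage nodes (GB):", total_ssd_gb())
    print("JBOD disk slots:", total_slots())
    # Hypothetical 10 TB disks, for illustration only.
    print("Usable TB with 10 TB disks:", usable_capacity_tb(10))
```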

Interconnection

- 2 x 48-port 10 Gbit switches interconnected with two 100 Gbit connections (Cloud/Storage network)

- 2 x 48-port 1 Gbit switches interconnected with two 40 Gbit connections (External network)
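One way to read these numbers is as an oversubscription ratio: the combined bandwidth of all access ports on one switch divided by the bandwidth of the links between the two switches. The sketch below computes it for both networks. It assumes the worst case, in which every port on one switch sends at line rate to the other switch, and the function name `oversubscription` is illustrative.

```python
def oversubscription(ports: int, port_gbit: float,
                     links: int, link_gbit: float) -> float:
    """Ratio of total access-port bandwidth to inter-switch bandwidth.

    A value above 1 means the inter-switch links can become a bottleneck
    if all ports on one switch talk to the other switch at full speed.
    """
    access_bandwidth = ports * port_gbit
    inter_switch_bandwidth = links * link_gbit
    return access_bandwidth / inter_switch_bandwidth


# Cloud/Storage network: 48 x 10 Gbit ports, 2 x 100 Gbit between switches.
cloud_storage = oversubscription(48, 10, 2, 100)
# External network: 48 x 1 Gbit ports, 2 x 40 Gbit between switches.
external = oversubscription(48, 1, 2, 40)

if __name__ == "__main__":
    print(f"Cloud/Storage network: {cloud_storage:.1f}:1")
    print(f"External network: {external:.1f}:1")
```

Under these assumptions the Cloud/Storage network comes out at 2.4:1 (480 Gbit against 200 Gbit), while the External network is at 0.6:1, so its inter-switch links cannot be saturated by the access ports alone.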